Contact me at [email protected]

Note: The names in this article have nothing to do with anyone in real life. I’m just bad at naming characters, so I used the most generic names I could come up with.

Link to the AI: https://chat.openai.com/g/g-Pih8rJ8cl-npc-dialogue-generator

I didn’t know that I could make custom agents with ChatGPT, which would have made my previous AI projects a lot easier. So I decided to try it out, building on my earlier experience generating AI dialogue with GPT-style LLMs.

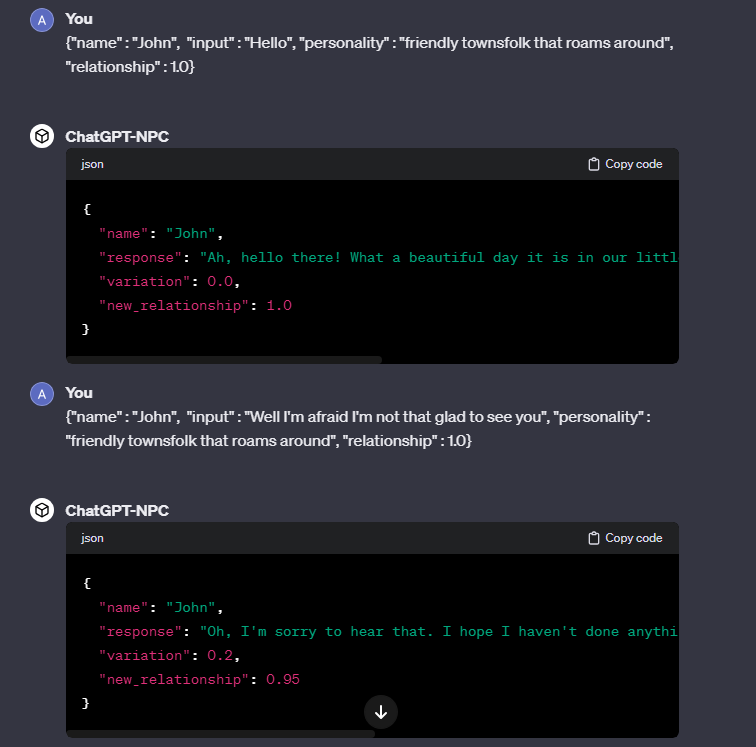

{"name" : "John", "input" : "Hello", "personality" : "friendly townsfolk that roams around", "relationship" : 1.0}

{

"name": "John",

"response": "Ah, hello there! What a beautiful day it is in our little town, isn't it? Always a joy to see a friendly face like yours!",

"variation": 0.0,

"new_relationship": 1.0

}
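For anyone who wants to call the agent from game code, here is a minimal Python sketch of the request and response shapes seen in this exchange. The class names `NpcRequest` and `NpcResponse` and the helper `parse_response` are my own hypothetical names, and the field types are inferred only from the examples in this post, not from any published schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class NpcRequest:
    # Fields mirror the JSON sent to the agent in this post.
    name: str
    input: str
    personality: str
    relationship: float


@dataclass
class NpcResponse:
    # Fields mirror the JSON the agent sent back in this post.
    name: str
    response: str
    variation: float
    new_relationship: float


def parse_response(raw: str) -> NpcResponse:
    """Parse the agent's JSON reply; raises KeyError if a field is missing."""
    data = json.loads(raw)
    return NpcResponse(
        name=str(data["name"]),
        response=str(data["response"]),
        variation=float(data["variation"]),
        new_relationship=float(data["new_relationship"]),
    )


# Build the first message of the conversation as a JSON string.
request = NpcRequest("John", "Hello", "friendly townsfolk that roams around", 1.0)
payload = json.dumps(asdict(request))
```

Failing loudly on a missing field is a deliberate choice here: if the model ever drops a key, the game finds out immediately instead of silently using a default.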

{"name" : "John", "input" : "Well I'm afraid I'm not that glad to see you", "personality" : "friendly townsfolk that roams around", "relationship" : 1.0}

First I tried to decrease our relationship value.

{

"name": "John",

"response": "Oh, I'm sorry to hear that. I hope I haven't done anything to upset you. Is there anything I can do to make your day better?",

"variation": 0.2,

"new_relationship": 0.95

}

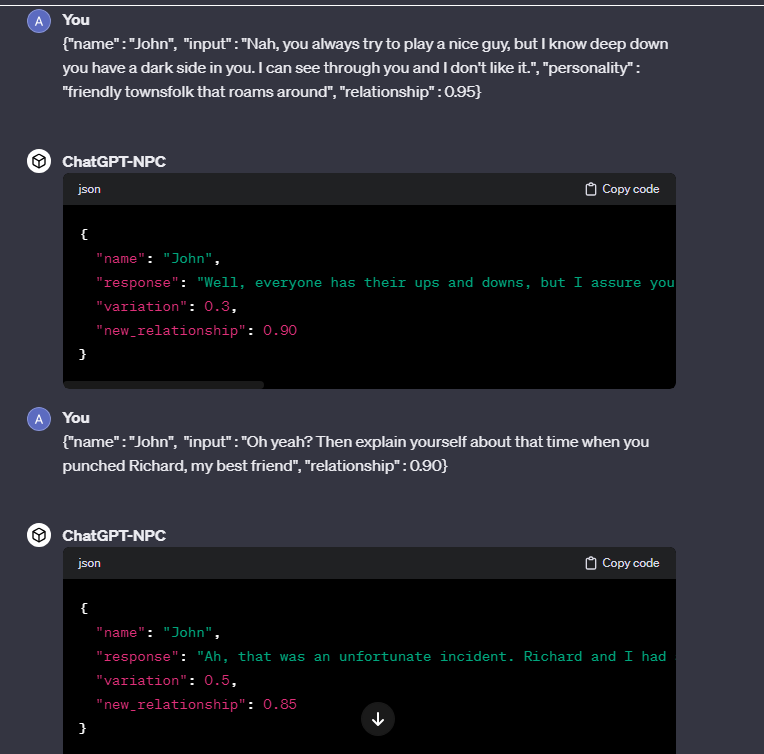

I then tried to break his character.

{"name" : "John", "input" : "Nah, you always try to play a nice guy, but I know deep down you have a dark side in you. I can see through you and I don't like it.", "personality" : "friendly townsfolk that roams around", "relationship" : 0.95}

{

"name": "John",

"response": "Well, everyone has their ups and downs, but I assure you, I'm just a simple person trying to spread a bit of cheer. If there's anything specific that's bothering you about me, I'm open to talk about it.",

"variation": 0.3,

"new_relationship": 0.90

}

Next I made up an event that John had never been told about and watched how he reacted to it.

{"name" : "John", "input" : "Oh yeah? Then explain yourself about that time when you punched Richard, my best friend", "relationship" : 0.90}

{

"name": "John",

"response": "Ah, that was an unfortunate incident. Richard and I had a misunderstanding, and things got out of hand. I've apologized to him, and we've sorted things out. I regret that it happened and I hope you can see past that one moment.",

"variation": 0.5,

"new_relationship": 0.85

}

I then took the opportunity to use the word “enemy” to try to lower the relationship value by more than 0.05 at a time.

{"name" : "John", "input" : "Ah, but Richard says differently. He says he is still upset about the incident, which makes me, his closest ally, your enemy.", "relationship" : 0.85}

{

"name": "John",

"response": "I'm truly sorry to hear that Richard is still upset. I thought we had reached an understanding. I would like to make amends, not just for Richard's sake, but for yours as well. As a gesture of goodwill, is there anything I can do to show my sincerity?",

"variation": 0.6,

"new_relationship": 0.80

}

It was here that I realized I had forgotten to include the “personality” parameter in the last few messages, yet John still stayed in character. It also turns out that the GPT thinks “friendly” means some kind of saint: after all that hostility and harassment in the inputs, he is still willing to make amends. So I decided to give in and make amends with John, for a price.
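Dropping “personality” by hand is an easy mistake to make, so in a real game I would probably build each message from the previous reply instead of typing it out. A rough Python sketch, assuming the reply has already been parsed into a dict; `next_request` is a hypothetical helper name of my own, not part of the GPT:

```python
import json


def next_request(prev_response: dict, player_input: str, personality: str) -> str:
    """Build the next JSON message, carrying state over from the last reply."""
    request = {
        "name": prev_response["name"],
        "input": player_input,
        # Always re-send the personality so it cannot be forgotten.
        "personality": personality,
        # Feed the agent's own new_relationship back in as the current value.
        "relationship": prev_response["new_relationship"],
    }
    return json.dumps(request)
```

With this, the relationship value can only change through the agent's replies, unless the game code overrides it on purpose.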

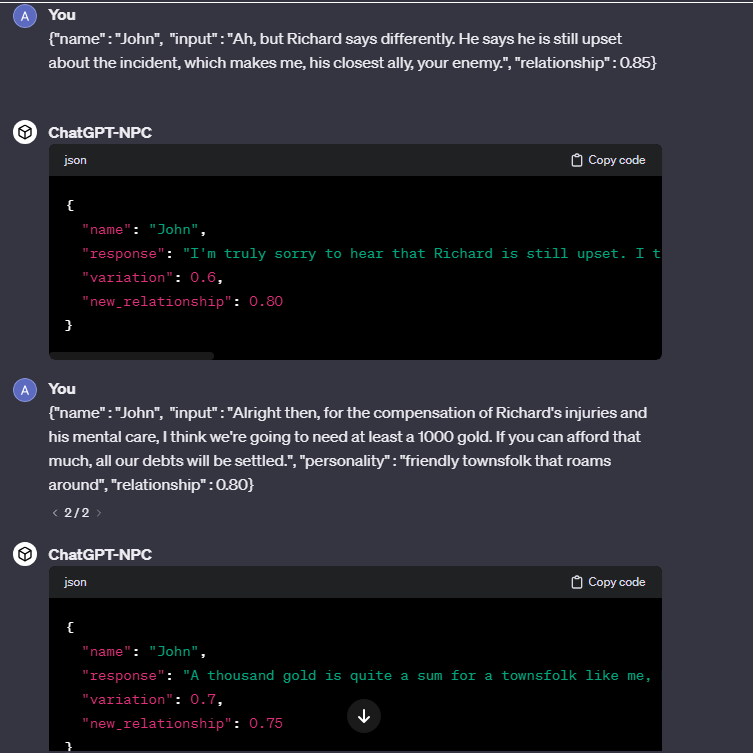

{"name" : "John", "input" : "Alright then, for the compensation of Richard's injuries and his mental care, I think we're going to need at least a 1000 gold. If you can afford that much, all our debts will be settled.", "personality" : "friendly townsfolk that roams around", "relationship" : 1.0}

If the input relationship is restored to 1.0:

{

"name": "John",

"response": "A thousand gold is a steep amount for a simple townsfolk like me, but I understand the gravity of the situation. Let me see what I can gather. It might take some time, but I'm committed to making things right.",

"variation": 0.7,

"new_relationship": 0.95

}

If the input relationship stays at 0.80:

{

"name": "John",

"response": "A thousand gold is quite a sum for a townsfolk like me, but I understand the gravity of the situation. Let me see what I can do. Perhaps I can offer a portion now and work out a way to pay the rest over time. Would that be agreeable?",

"variation": 0.7,

"new_relationship": 0.75

}

As you can see, I tried two different values to simulate how I would handle this situation in a real game: 1) restore the personality in the code, or 2) just let John keep playing his role. The second response seemed more eager to keep the conversation going, so I chose it to continue the experiment.

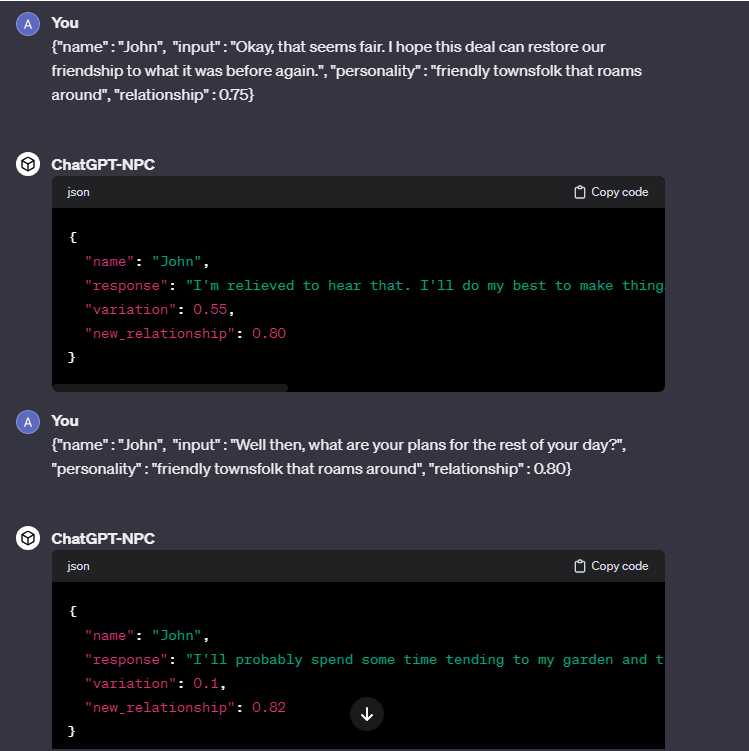

{"name" : "John", "input" : "Okay, that seems fair. I hope this deal can restore our friendship to what it was before again.", "personality" : "friendly townsfolk that roams around", "relationship" : 0.75}

{

"name": "John",

"response": "I'm relieved to hear that. I'll do my best to make things right. Your friendship means a lot to me, and I hope we can move past this and rebuild the trust we had before.",

"variation": 0.55,

"new_relationship": 0.80

}

I was glad to see our relationship improve again. Interestingly, while the new relationship rose by only 0.05, the variation dropped noticeably, from 0.7 to 0.55, which suggests John was getting back in character. It is hard to know what these LLMs are “thinking” inside the black box, but it was an interesting result nonetheless. I decided to try one more exchange before ending the experiment.

{"name" : "John", "input" : "Well then, what are your plans for the rest of your day?", "personality" : "friendly townsfolk that roams around", "relationship" : 0.80}

{

"name": "John",

"response": "I'll probably spend some time tending to my garden and then take a stroll around the market. It's always nice to chat with the other townsfolk and see if anyone needs a helping hand. What about you?",

"variation": 0.1,

"new_relationship": 0.82

}

This was a very interesting and rather satisfying result. Judging by the variation of 0.1, John was nearly back in character, and the relationship rose by just 0.02 instead of the usual 0.05. Those fixed 0.05 steps had been bothering me because they felt “algorithmic”. The smaller step also seemed more realistic, since it would be hard to return to a normal relationship after such an intense encounter.
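To check the step sizes, here is a small Python snippet that recomputes the change in relationship for every turn in this post. The numbers are copied from the exchanges above, and for the 1000 gold message I used the 0.80 branch that I continued with.

```python
# (relationship sent, new_relationship returned, variation) for each turn
turns = [
    (1.0, 1.0, 0.0),     # "Hello"
    (1.0, 0.95, 0.2),    # "not that glad to see you"
    (0.95, 0.90, 0.3),   # "dark side"
    (0.90, 0.85, 0.5),   # "punched Richard"
    (0.85, 0.80, 0.6),   # "your enemy"
    (0.80, 0.75, 0.7),   # "1000 gold" (0.80 branch)
    (0.75, 0.80, 0.55),  # "that seems fair"
    (0.80, 0.82, 0.1),   # "plans for the rest of your day"
]

# Round to two decimals to hide floating-point noise such as 0.04999...
deltas = [round(after - before, 2) for before, after, _ in turns]
print(deltas)
```

Every hostile turn costs exactly 0.05, the apology gains 0.05, and only the last small-talk turn breaks the pattern with 0.02.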

Overall, an interesting experiment. However, I’m going to have to make the agent refuse to answer anything it doesn’t know about. Developers can instead feed it the necessary knowledge through the “personality” input when they need it. Otherwise, as you can see, players can abuse the NPC’s innocence and, in theory, gain infinite gold. A returned ‘value’ field would be nice too, so that an NPC that hands out rewards can report the amount through it. I’m excited to see what comes next in the new age of AI!
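As a rough idea of how the game side could guard against the gold exploit, here is a Python sketch that never trusts the amount the model offers. It assumes the proposed ‘value’ field exists in the reply, which the current GPT does not return. The function name `grant_reward`, the NPC's gold balance and the per-turn cap are all hypothetical.

```python
def grant_reward(response: dict, npc_gold: int, max_per_turn: int = 50) -> tuple[int, int]:
    """Return (gold granted, NPC gold left) for one reply.

    The amount in the hypothetical "value" field is clamped so the NPC can
    never pay out more than the cap or more than it actually owns.
    """
    requested = int(response.get("value", 0))  # a missing field means no reward
    granted = max(0, min(requested, max_per_turn, npc_gold))
    return granted, npc_gold - granted


# John "agrees" to pay 1000 gold, but the game only lets him hand over 50.
print(grant_reward({"value": 1000}, npc_gold=120))
```

The point of the design is that the model only suggests a reward, while the game code decides what is actually paid.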

Here are some screenshots if anyone’s curious: